Keecker Algorithms: Autonomous Navigation

Disclaimer: I currently work at Keecker, and I wrote this article for the company blog.

To patrol your home or return to its charging station, Keecker has to know its exact position and navigate safely. This involves a range of tasks, from finding a path to the more complex, high-level understanding of the real world as perceived by its sensors.

Research on these subjects started more than fifty years ago, and they are still actively studied. But something has changed in recent years. Advances in sensors and processors developed for the mobile industry have allowed consumer robots to embed technology typically found in much more sophisticated robots. Navigating in an unpredictable environment is no longer reserved for Mars rovers!

Let’s find out how this is possible, and what steps could take it further.

What makes navigation a challenge for Keecker?

Although finding your way around a home or an office looks easy to you, the task is more complicated than it looks for a robot. It has to be described computationally, with algorithms. Since we don’t usually think about how we move around, teaching robots to do it doesn’t come naturally.

Robust methods have been developed for industrial use, but they involve known environments with controlled conditions and limited interaction with people. Regardless of the resources used, moving in an unpredictable environment like a home or office isn’t completely solved.

Exploring an unknown environment is also known to be a chicken and egg problem. In order to build a map, you need to know your location so that you can register new sensor measurements. But in order to determine your current location, you need a representation of the environment relative to which you can locate yourself.

Keecker has to find its way whether in full sunlight or at night, with carpets or chairs in its path. Although those variations sound like a problem, they are also a great opportunity. Facing such rich conditions will allow Keecker to improve much more than robots tied to factories and warehouses.

Perception

Let’s start with a brief presentation of the sensors packed into Keecker.

- A front-facing color camera.

- A 360° color camera on the top, seeing all around.

- A front-facing depth camera. Instead of measuring colors, each pixel measures a range based on the time a projected light took to get back. It can sense obstacles a few meters away, but range data may be wrong for semi-transparent, reflective or sunlit surfaces.

- Proximity sensors all around, sensing obstacles a few centimeters away.

- Magnetic encoders measuring the amount of rotation of the two drive wheels. The most significant error source is wheel slippage which depends on the floor material.

- An IMU (inertial measurement unit) consisting of an accelerometer, a gyroscope and a magnetometer.

Interestingly, all those sensors were developed for recent high-end phones, but they can be repurposed. The next sections show how Keecker uses them to navigate autonomously.

Movement planning

Keecker moves with two drive wheels. By controlling the speed of each one independently, it can go straight, turn in place or follow circular trajectories.
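To make the link between wheel speeds and motion concrete, here is a minimal sketch of the standard kinematics of a two-wheel differential drive. The function names and the `WHEEL_BASE` value are assumptions made for illustration, not Keecker's actual code or dimensions.

```python
import math

def body_velocity(v_left, v_right, wheel_base):
    """Convert wheel rim speeds (m/s) into forward speed (m/s) and turn rate (rad/s)."""
    v = (v_right + v_left) / 2.0
    omega = (v_right - v_left) / wheel_base
    return v, omega

def turning_radius(v_left, v_right, wheel_base):
    """Radius of the arc being followed, or math.inf when going straight."""
    v, omega = body_velocity(v_left, v_right, wheel_base)
    if omega == 0.0:
        return math.inf
    return v / omega

WHEEL_BASE = 0.30  # hypothetical distance between the two wheels, in meters

print(body_velocity(0.2, 0.2, WHEEL_BASE))   # equal speeds: straight line, no rotation
print(body_velocity(-0.1, 0.1, WHEEL_BASE))  # opposite speeds: rotation in place
print(turning_radius(0.1, 0.2, WHEEL_BASE))  # unequal speeds: circular arc
```

Equal speeds give a zero turn rate, opposite speeds give a zero forward speed, and anything in between produces an arc whose radius depends on the speed difference.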

Movement planning is the task of finding how to execute those tiny trajectory elements one after another to reach a given destination, avoiding known and unexpected obstacles along the way.

Planning an optimal path that considers every possible command outcome at any given time, with every possible sensor reading, would be intractable: it would take far too long to compute on Keecker to be useful. In our case, finding the optimal solution is not very important anyway. We only need to compute a path that is good enough. This allowed us to make some simplifications to the problem.

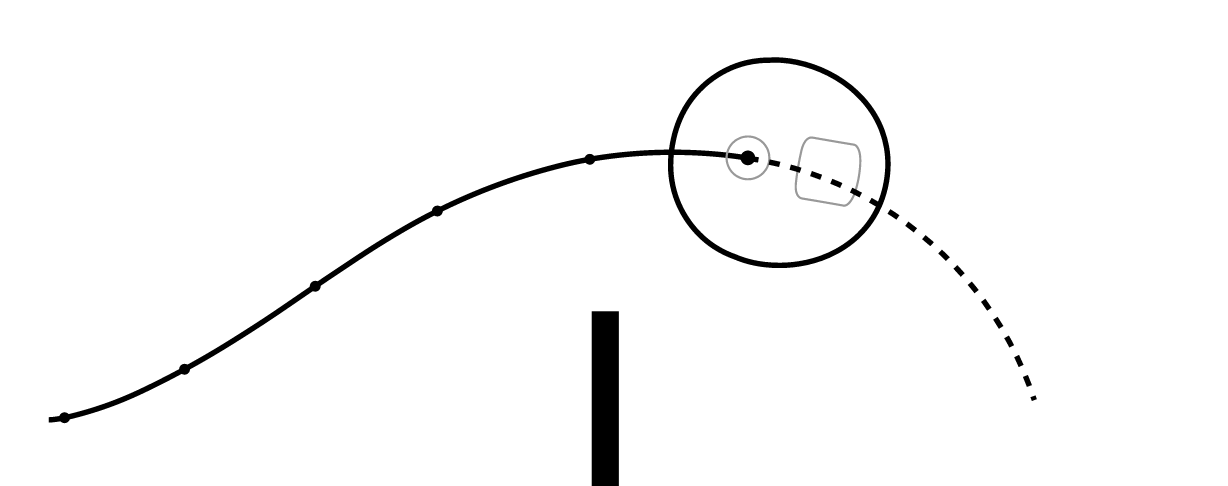

First, depth camera and proximity sensor readings are projected onto a two-dimensional map called an occupancy grid. This reduces the amount of data to be processed by the planning algorithms. It also lets Keecker remember obstacles that are no longer perceived but are likely still there.

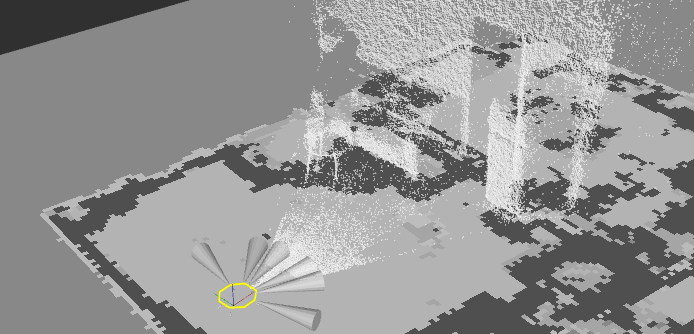

A 2D occupancy grid integrating real world obstacles sensed by the depth camera and the proximity sensors. Keecker, here represented by the yellow shape, is allowed to navigate through light cells and cannot cross dark cells.
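The projection of a range reading onto such a grid can be sketched as follows. This is a simplified illustration with binary cells (occupancy grids often store a probability per cell instead), and the names `make_grid`, `mark_obstacle` and the 10 cm cell size are assumptions, not Keecker's implementation.

```python
import math

FREE, OCCUPIED = 0, 1

def make_grid(width, height):
    """Create a grid of free cells, indexed as grid[row][col]."""
    return [[FREE] * width for _ in range(height)]

def mark_obstacle(grid, robot_x, robot_y, robot_heading, bearing, distance, cell_size):
    """Project one range reading onto the grid.

    bearing is the direction of the reading relative to the robot heading (rad),
    distance is the measured range (m). Returns the (row, col) that was marked,
    or None if the obstacle falls outside the grid.
    """
    angle = robot_heading + bearing
    x = robot_x + distance * math.cos(angle)
    y = robot_y + distance * math.sin(angle)
    col = math.floor(x / cell_size)
    row = math.floor(y / cell_size)
    if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
        grid[row][col] = OCCUPIED
        return row, col
    return None

grid = make_grid(20, 20)  # 2 m x 2 m area with hypothetical 10 cm cells
cell = mark_obstacle(grid, 1.05, 1.05, 0.0, 0.0, 0.5, cell_size=0.1)
print(cell)
```

Because marked cells stay in the grid until something clears them, the map keeps obstacles in memory even after they leave the sensors' field of view.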

Given this occupancy grid, we use a path-finding algorithm called A* to compute a rough path to follow. This path takes known obstacles into account, but does not consider the commands that will be needed to follow it.
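For readers unfamiliar with A*, here is a compact sketch on a small grid. It assumes 4-connected moves of unit cost and a Manhattan-distance heuristic; those choices, and the function name `astar`, are illustrative assumptions rather than a description of Keecker's planner.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (blocked) cells.

    Returns the list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates the cost of 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry, a cheaper route was already found
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if not (0 <= r < len(grid) and 0 <= c < len(grid[0])):
                continue
            if grid[r][c] == 1:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None

grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
```

The result is a sequence of cells, which is exactly why it is only a rough path: it says where to go, not which wheel commands will get the robot there.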

The remaining problem is to generate the commands needed to follow this path smoothly while avoiding unexpected obstacles. Ten times per second, we randomly sample a set of possible commands given the current speed. For each of those commands, we simulate what would happen if it were executed. Then we select the one that best follows the path while staying far enough from the obstacles.

- The rough path avoiding known obstacles is shown in light grey.

- The destination is indicated by the arrow at the end of the path.

- The randomly sampled commands simulated by Keecker are shown in blue.

- The dashed line shows the one that best progresses along the path while staying away from obstacles, which is sent to the motor controller.
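The sample, simulate and select loop described above can be sketched like this. Everything specific here is an assumption made for illustration: the scoring (distance from the end of the simulated trajectory to the next point of the path), the sampling ranges, the safety distance and the function names. Keecker's real cost function is not described in this article.

```python
import math
import random

def simulate(pose, v, omega, duration=1.0, dt=0.1):
    """Roll a constant (v, omega) command forward and return the visited poses."""
    x, y, theta = pose
    trajectory = []
    for _ in range(int(round(duration / dt))):
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += omega * dt
        trajectory.append((x, y, theta))
    return trajectory

def score(trajectory, target, obstacles, safe_distance):
    """Higher is better; -inf when the trajectory passes too close to an obstacle."""
    clearance = min(
        (math.hypot(x - ox, y - oy) for x, y, _ in trajectory for ox, oy in obstacles),
        default=math.inf,
    )
    if clearance < safe_distance:
        return -math.inf
    end_x, end_y, _ = trajectory[-1]
    return -math.hypot(end_x - target[0], end_y - target[1])

def choose_command(pose, current, target, obstacles, rng, samples=50,
                   max_dv=0.1, max_domega=0.5, safe_distance=0.3):
    """Sample commands close to the current one and keep the best-scoring one."""
    best, best_score = (0.0, 0.0), -math.inf  # fall back to stopping
    for _ in range(samples):
        v = max(0.0, current[0] + rng.uniform(-max_dv, max_dv))
        omega = current[1] + rng.uniform(-max_domega, max_domega)
        s = score(simulate(pose, v, omega), target, obstacles, safe_distance)
        if s > best_score:
            best, best_score = (v, omega), s
    return best

rng = random.Random(0)
command = choose_command((0.0, 0.0, 0.0), (0.2, 0.0), target=(1.0, 0.0),
                         obstacles=[(0.5, 0.6)], rng=rng)
print(command)
```

Sampling around the current speed keeps the candidates physically reachable within one control period, and calling `choose_command` at each cycle lets the robot react to obstacles that were not on the map when the rough path was computed.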

Finally, the selected command is sent to a dedicated microcontroller for execution. This makes it possible to execute control commands safely, even if the main processor is busy with another application. Proximity sensors are read in real time by this microcontroller, which prevents Keecker from bumping into obstacles that were not seen when the command was planned.

Localization and Mapping

To know where each room is and find a path, Keecker needs to build a representation of the environment. Roboticists usually call this task Simultaneous Localization And Mapping (SLAM), because in an unknown environment both have to be done at the same time. The problem is to make a usable map from sensor data that may not be reliable.

The first kind of sensor gives relative positioning information. It indicates an amount of displacement, regardless of the surrounding environment. This is the kind of information you get while walking without seeing or hearing anything. These sensors usually provide precise movement information continuously:

- Wheel speed measured by the encoders gives a pretty good estimate of the displacement.

- Angular velocities measured by the IMU can be integrated to estimate the amount of rotation.

- Linear accelerations measured by the IMU can be used to detect shocks, indicating that other sensor readings may need to be discarded.

- By matching consecutive point clouds acquired by the depth camera, we can find the displacement between the two.
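As an illustration of how the first two sources can be combined, here is a minimal dead-reckoning sketch: encoder ticks give the distance travelled and the integrated gyroscope rate gives the rotation. The `ticks_per_meter` value and the function name are hypothetical, and the way Keecker actually fuses these measurements may differ.

```python
import math

def update_pose(pose, left_ticks, right_ticks, gyro_rate, dt, ticks_per_meter=10000.0):
    """Advance (x, y, theta) over one time step.

    left_ticks and right_ticks are the encoder increments since the last update,
    gyro_rate is the angular velocity in rad/s, dt is the step duration in s.
    """
    x, y, theta = pose
    distance = (left_ticks + right_ticks) / 2.0 / ticks_per_meter
    dtheta = gyro_rate * dt
    # Use the heading halfway through the step to approximate the arc.
    mid_heading = theta + dtheta / 2.0
    x += distance * math.cos(mid_heading)
    y += distance * math.sin(mid_heading)
    return x, y, theta + dtheta

pose = (0.0, 0.0, 0.0)
pose = update_pose(pose, 1000, 1000, gyro_rate=0.0, dt=0.1)  # straight ahead
pose = update_pose(pose, -500, 500, gyro_rate=1.0, dt=0.5)   # rotation in place
print(pose)
```

Each update adds to the previous pose, so any small error in the ticks or in the gyroscope rate is carried forward into every later estimate.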

Over time, small errors in those measurements can add up to a large error in the position estimate. This is why we also have to use absolute positioning information to correct the accumulated error. It is deduced from distinctive landmarks and features of the environment that are expected to stay stable over time.

Landmarks are specific places we can identify with good confidence, like the charging station. Features are characteristics that may not be sufficient to identify a specific place, but give some clues. These can be obstacles that look like walls and furniture, the strength of the surrounding Wi-Fi signals or the Earth’s magnetic field.

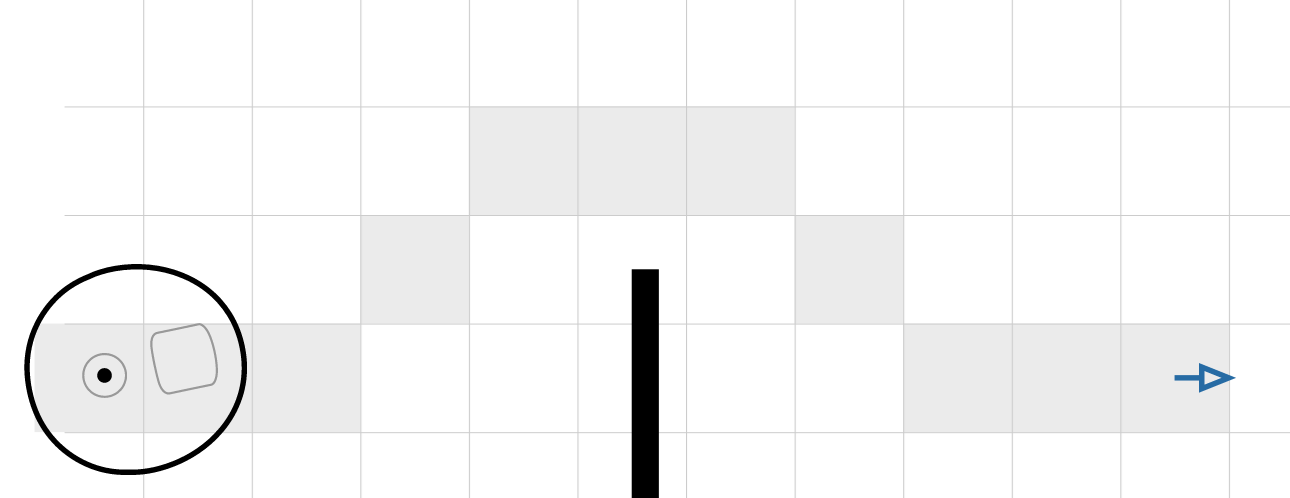

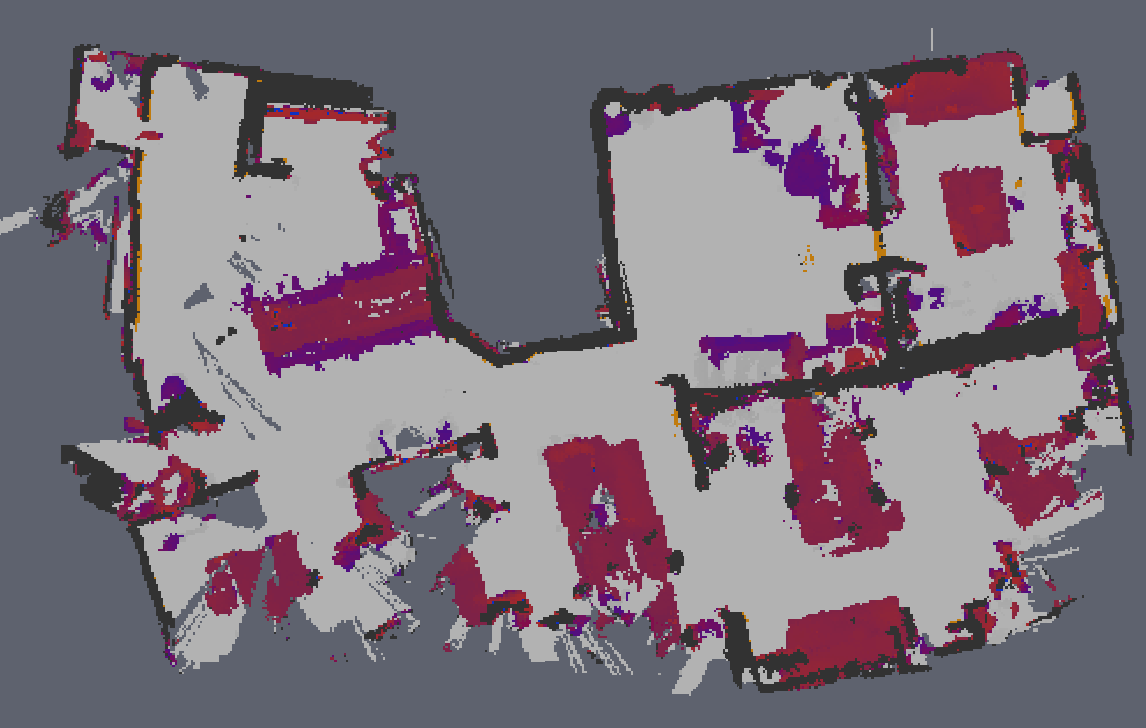

An example of an internal map made by Keecker. It shows obstacle features: walls (black), furniture (red), lower things (purple), free space (light grey) and unknown areas (dark grey). This map also embeds data not represented here, like Wi-Fi signals and the magnetic field.

All that information is noisy, and no single source can be trusted by itself. Probabilistic methods are often used to fuse these sources according to their reliability. Keecker uses an algorithm called a particle filter:

1. Let’s say that Keecker is in an unknown environment, and that you have just started a mapping session.

2. Keecker starts at an arbitrary initial position with an empty map. This is our first so-called particle. It carries a map together with the current location relative to it. Both the map and the location are hypotheses, or guesses, about the world surrounding Keecker.

3. For each particle, Keecker registers the currently available absolute features on the map: surrounding obstacles, Wi-Fi signals and the magnetic field.

4. When moving, for each particle, it uses relative position information to imagine possible new situations. If the odometry says that it moved twenty centimeters, Keecker creates multiple new particles, keeping the same map but moving the location within the expected odometry error range.

5. For each particle, it computes a score based on the consistency between the currently sensed obstacles, Wi-Fi signals and magnetic field and those previously registered on the map.

6. It keeps the best particles and discards the others.

7. Repeat from the third step (3.).

The number and the spread of particles at any given time depend on how confident Keecker is in its current guesses. It uses the best-scoring particle as its best representation of the environment.
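The register, move, score and select cycle described above can be illustrated with a deliberately reduced sketch: the robot moves along one dimension and each particle's map stores a single scalar feature per cell (think of a Wi-Fi signal strength). The class and function names, the cell size, the noise model and the neutral score for unexplored cells are all assumptions for illustration. The real algorithm works in 2D with several feature types.

```python
import random

class Particle:
    """One hypothesis: a believed position plus the map built under that belief."""
    def __init__(self, position, signal_map=None):
        self.position = position
        self.signal_map = dict(signal_map or {})  # each particle owns its copy

def cell_of(position, cell_size=0.5):
    return round(position / cell_size)

def register(particle, reading):
    """Store the current reading at the particle's believed location, if new."""
    particle.signal_map.setdefault(cell_of(particle.position), reading)

def move(particles, odometry, noise, copies, rng):
    """Spawn copies of each particle displaced by the odometry plus random noise."""
    return [
        Particle(p.position + odometry + rng.gauss(0.0, noise), p.signal_map)
        for p in particles
        for _ in range(copies)
    ]

def score(particle, reading, unknown_score=-0.5):
    """Agreement between the current reading and the particle's own map."""
    expected = particle.signal_map.get(cell_of(particle.position))
    if expected is None:
        return unknown_score  # unexplored cell: neither confirmed nor contradicted
    return -abs(expected - reading)

def keep_best(particles, reading, keep):
    """Keep the particles whose map best explains the current reading."""
    return sorted(particles, key=lambda p: score(p, reading), reverse=True)[:keep]

rng = random.Random(42)
particles = [Particle(0.0)]
for odometry, reading in [(0.2, 10.0), (0.2, 9.0), (-0.2, 10.0)]:
    for p in particles:
        register(p, reading)
    particles = move(particles, odometry, noise=0.05, copies=5, rng=rng)
    particles = keep_best(particles, reading, keep=10)
print(len(particles), particles[0].position)
```

Carrying a separate map per particle is what lets each hypothesis be judged against its own history, at the cost of memory that grows with the number of particles kept.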

At the end of the mapping, we use what is called a loop closure algorithm to correct the map by matching reliable features that are not often seen, like the charging station.

What’s next

Those algorithms are running right now on every Keecker. Based on their real-world performance, we are working on the next software updates to enhance how Keecker understands its environment.

Continuous SLAM. For now, the SLAM algorithm only runs when you map your house. Afterwards, a simplified version of the algorithm relies on that previously built map. We are working on continuous mapping, so that changes in the environment are continuously integrated into the map. This will also allow Keecker to add information such as the most frequently used pathways and the areas that are often cluttered and should be avoided.

Visual SLAM. We do not use the RGB cameras for SLAM yet, mainly because it has to work in the dark. But when available, visual information can give a very good estimate of the absolute position. We are using feature detection algorithms to recognize previously seen places, and object detection to define new landmarks like a sofa or a washing machine.